Publications

[J-5] T. Bhattacharjee, G. Lee, H. Song, and S. S. Srinivasa, "Towards Robotic Feeding: Role of Haptics in Fork-based Food Manipulation," 2019 IEEE International Conference on Robotics and Automation (ICRA) and IEEE Robotics and Automation Letters (RA-L). [pdf] [video] [media]

[J-2] T. Bhattacharjee, H. Song, G. Lee, and S. S. Srinivasa, "A Dataset of Food Manipulation Strategies," International Journal of Robotics Research, 2018. [pdf]

[D-1] T. Bhattacharjee, H. Song, G. Lee, and S. S. Srinivasa, "A Dataset of Food Manipulation Strategies," 2018. [Online]. Available: https://doi.org/10.7910/DVN/8TTXZ7

Motivation

Feeding is a particularly high-impact task because approximately 12.3 million people need assistance with this activity of daily living.

Purpose

The food manipulation project aims to enable a robot to feed people with upper-extremity impairments.

Approach

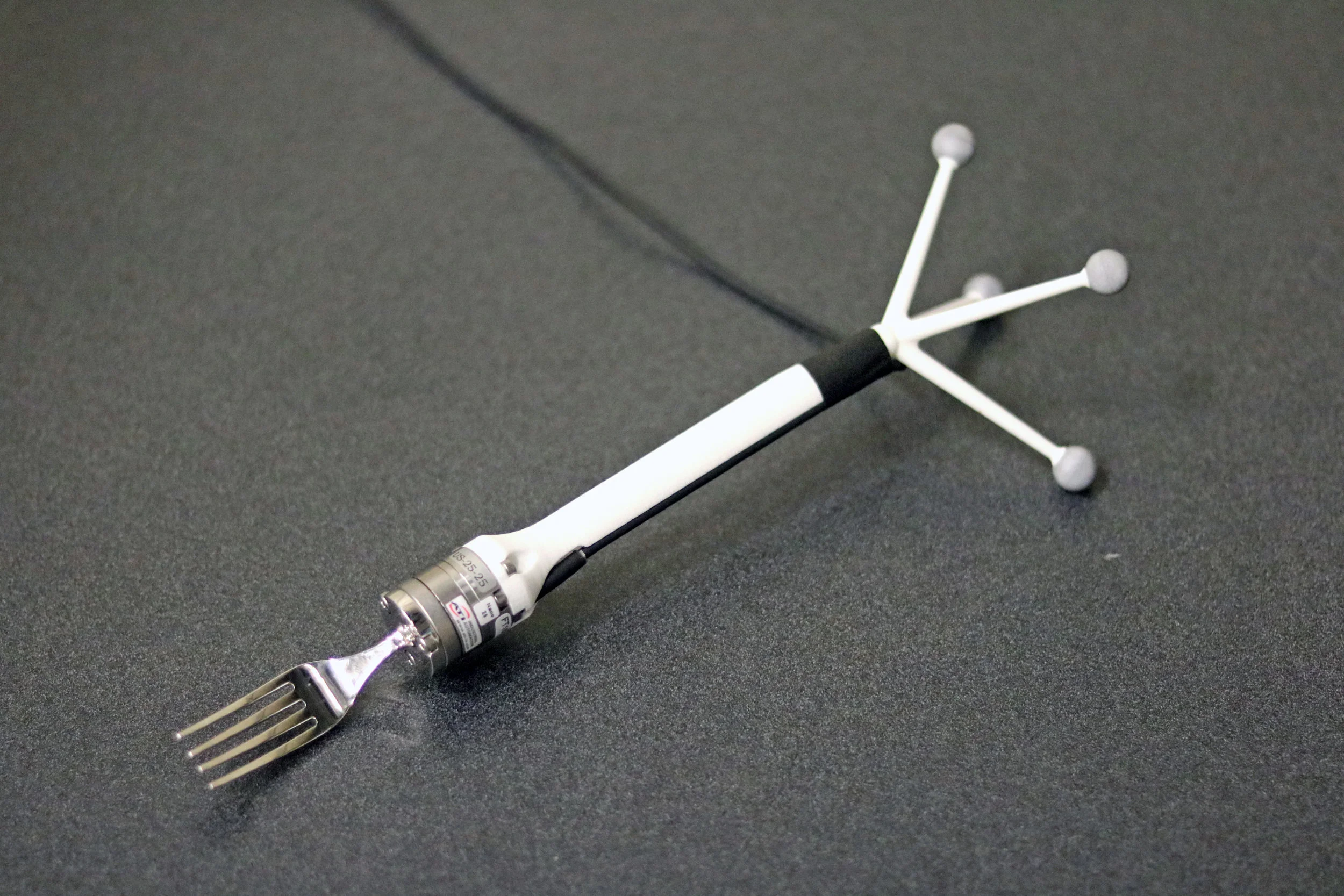

Forque: a fork that senses force and torque

Experiment Setup

A human-subject experiment collects haptic and motion data from the forque, which embeds a force/torque sensor and motion capture markers, while participants fork food items.
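As an illustration only, one recorded time step of a forque trial might be represented as below. The field names, units, and the `peak_force` summary are hypothetical assumptions for this sketch, not the format of the published dataset. The example reduces a 3-axis force reading to its magnitude and takes the peak over a trial.

```python
from dataclasses import dataclass
import math


@dataclass
class ForqueSample:
    """One time step of a hypothetical forque recording (field names are assumptions)."""
    t: float                              # timestamp in seconds
    force: tuple[float, float, float]     # (Fx, Fy, Fz) in newtons
    torque: tuple[float, float, float]    # (Tx, Ty, Tz) in newton-meters
    position: tuple[float, float, float]  # fork position from motion capture, in meters


def force_magnitude(sample: ForqueSample) -> float:
    """Euclidean norm of the measured force vector."""
    return math.sqrt(sum(f * f for f in sample.force))


def peak_force(trial: list[ForqueSample]) -> float:
    """Largest force magnitude observed during one forking trial."""
    return max(force_magnitude(s) for s in trial)


if __name__ == "__main__":
    # Two made-up samples, for illustration only.
    trial = [
        ForqueSample(0.00, (0.0, 0.0, 3.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)),
        ForqueSample(0.01, (3.0, 4.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)),
    ]
    print(peak_force(trial))  # 5.0
```

Summaries such as the peak force are one possible way to turn a raw time series into a fixed-length feature for learning; the actual features used in the project may differ.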

With this data, a robot will learn how to classify and fork food items based on haptic feedback and visual perception.
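To make the idea of classifying food from haptic feedback concrete, here is a minimal sketch using a nearest-centroid classifier over per-trial feature vectors. This is a swapped-in, deliberately simple technique chosen for illustration and is not presented as the method used in the papers above. The feature choice (for example, peak and mean force per trial), the food labels, and all numbers are hypothetical.

```python
import math


def centroids(train: dict[str, list[list[float]]]) -> dict[str, list[float]]:
    """Average the feature vectors of each food label into one centroid."""
    result = {}
    for label, vectors in train.items():
        n = len(vectors)
        # zip(*vectors) groups the i-th feature of every vector together.
        result[label] = [sum(col) / n for col in zip(*vectors)]
    return result


def classify(features: list[float], cents: dict[str, list[float]]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(cents, key=lambda label: math.dist(features, cents[label]))


if __name__ == "__main__":
    # Hypothetical [peak force, mean force] values in newtons, not real data.
    train = {
        "banana": [[2.0, 1.0], [2.2, 1.1]],
        "carrot": [[8.0, 4.0], [7.6, 3.8]],
    }
    cents = centroids(train)
    print(classify([7.9, 3.9], cents))  # carrot
```

A nearest-centroid rule keeps the sketch short and dependency-free; a real system combining haptic and visual input would likely use a richer learned model.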